Project overview

Person localization in an indoor environment is a crucial component of a variety of applications, ranging from visual surveillance and supervision to assisted living, where the common objective is to monitor people's activities in target spaces. This is, however, a challenging problem: complex building layouts, walls, furniture and other entities interfere with reliable sensing of humans and their activities. Fortunately, there has been a surge in the deployment of various types of sensors, ranging from body-worn sensors to sensors placed in the near vicinity, such as cameras.

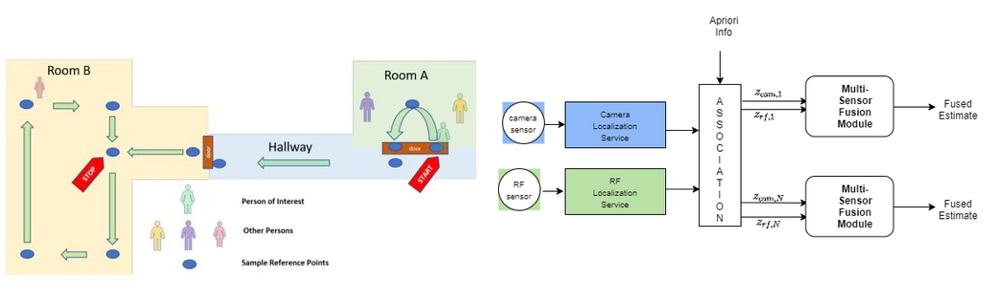

There have been numerous research studies on localization, both with individual sensor technologies and with multi-sensor fusion. In this project, the target space of interest is equipped with dozens of camera sensors and wireless locators. People walk through the space carrying Radio Frequency Identification (RFID) tags and are detected by both the camera sensors and the wireless locators. Both sensor systems provide real-time estimates of each person's location, but these estimates are not guaranteed to be available at all times.
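As a rough illustration of fusing estimates that may be missing or may arrive at arbitrary times, the sketch below keeps only the most recent timestamped estimate from each system for each person and combines whatever is still fresh at query time, without any model of either sensor system. It is not this project's method. The class `LatestEstimateFuser`, the exponential age weighting with time constant `tau`, the `max_age` cutoff, and the assumptions that both systems report positions in one shared floor-plan frame with comparable timestamps and that each camera estimate is already associated with a person ID are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]  # (x, y) in a shared floor-plan frame (assumed)


@dataclass
class Estimate:
    position: Point
    timestamp: float  # seconds; assumed comparable across both systems


class LatestEstimateFuser:
    """Hypothetical fuser: keeps the newest estimate per (person, modality).

    Estimates may arrive at any time, from either system, or not at all.
    On query, each fresh estimate is weighted by exp(-age / tau), and
    anything older than max_age seconds is treated as unavailable.
    """

    def __init__(self, tau: float = 1.0, max_age: float = 3.0) -> None:
        self.tau = tau
        self.max_age = max_age
        self.latest: Dict[Tuple[str, str], Estimate] = {}

    def update(self, person: str, modality: str, est: Estimate) -> None:
        key = (person, modality)
        old = self.latest.get(key)
        # Drop late-arriving estimates that are older than the one we hold.
        if old is None or est.timestamp >= old.timestamp:
            self.latest[key] = est

    def fuse(self, person: str, now: float) -> Optional[Point]:
        total_w = 0.0
        x = y = 0.0
        for (pid, _modality), est in self.latest.items():
            if pid != person:
                continue
            age = max(0.0, now - est.timestamp)  # clamp small clock skew
            if age > self.max_age:
                continue  # too stale: treat this modality as missing
            w = math.exp(-age / self.tau)
            total_w += w
            x += w * est.position[0]
            y += w * est.position[1]
        if total_w == 0.0:
            return None  # neither system has a usable estimate
        return (x / total_w, y / total_w)


fuser = LatestEstimateFuser(tau=1.0, max_age=3.0)
fuser.update("tag-A", "camera", Estimate((2.0, 4.0), timestamp=10.0))
fuser.update("tag-A", "rfid", Estimate((4.0, 4.0), timestamp=10.0))
fuser.update("tag-A", "camera", Estimate((9.0, 9.0), timestamp=8.0))  # late, ignored
print(fuser.fuse("tag-A", now=10.0))  # (3.0, 4.0)
print(fuser.fuse("tag-A", now=20.0))  # None
```

When only one system has reported recently, the fused position is simply that system's estimate; when neither has, the sketch returns `None` rather than guessing.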

One of the goals is to fuse these estimates in real time. Unlike previous studies, this work makes no assumptions about models of the sensor systems, about when the estimates arrive, or about a known time delay between estimates. Another challenge this work addresses is data association. Typically there are multiple persons or objects of interest in a space, and for each modality the association between estimates and targets needs to be known. This is especially difficult for camera sensors: once a person has been detected in an image, determining which RFID tag that person should be associated with is not straightforward.
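To make the association problem concrete, the sketch below matches camera detections to RFID tag positions by choosing the one-to-one assignment with the lowest total distance, with a gating threshold so that implausible pairs stay unmatched. This is a standard baseline swapped in for illustration, not necessarily the approach taken in this work. The function `associate`, the 2.0 m default `gate`, and the assumption that camera detections have already been projected into the same floor-plan coordinates as the RFID estimates are all hypothetical.

```python
import itertools
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) in a shared floor-plan frame (assumed)


def associate(
    detections: List[Point],
    tag_positions: Dict[str, Point],
    gate: float = 2.0,
) -> Dict[int, Optional[str]]:
    """Map each camera detection index to one RFID tag ID, or None.

    Enumerates every one-to-one assignment and keeps the one with the lowest
    total distance. Leaving a detection unassigned costs `gate`, and pairs
    farther apart than `gate` are never matched (gating).
    """
    n = len(detections)
    # One None slot per detection lets any detection stay unassigned,
    # while each tag ID can still be used at most once.
    candidates: List[Optional[str]] = list(tag_positions) + [None] * n
    best_cost = math.inf
    best: Dict[int, Optional[str]] = {i: None for i in range(n)}
    for choice in itertools.permutations(candidates, n):
        cost = 0.0
        for det, tag in zip(detections, choice):
            if tag is None:
                cost += gate
                continue
            d = math.dist(det, tag_positions[tag])
            if d > gate:
                cost = math.inf  # implausible pairing, reject this assignment
                break
            cost += d
        if cost < best_cost:
            best_cost = cost
            best = dict(enumerate(choice))
    return best


detections = [(0.0, 0.0), (5.0, 5.0), (20.0, 20.0)]
tags = {"tag-A": (5.2, 4.9), "tag-B": (0.3, -0.1)}
print(associate(detections, tags))  # {0: 'tag-B', 1: 'tag-A', 2: None}
```

Exhaustive search grows factorially with the number of people, so it only suits a handful of targets; with dozens of people in view, the same minimum-cost assignment would normally be computed with the Hungarian algorithm, for example via `scipy.optimize.linear_sum_assignment`.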