Immersion, interactivity, cloudification, M2M and AI: 5 trends in future video usage

Digital media is undergoing a rapid evolution – an evolution that addresses emerging technology trends, consumer behaviors and industry applications.

Today, video seems to be everywhere! Consumers are spending more time streaming and sharing video. Video is embedded in all types of online content – in news, ads and social media – and we are increasingly switching to video-on-demand streaming services.

Our media consumption behavior is changing too, as video is being watched anywhere and anytime on our smart devices. It should come as no surprise that nearly three-quarters of mobile traffic today is video. Traditional passive media consumption is being challenged by emerging immersive media formats and applications that introduce new interactive experiences with augmented and virtual reality. These new technologies, too, will increasingly expand from entertainment to communications as an eco-friendly and safe way to connect people. The recent global pandemic has only accelerated those trends. According to a Nokia Deepfield analysis of internet usage patterns in the time of COVID-19, every manner of video application is experiencing sizable increases in usage. In Europe, there was a 13% increase in YouTube traffic and a 58% increase in Netflix streaming the week after shelter-at-home orders went into effect. But the most astonishing rise came from video communications. Nokia measured a 350% increase in traffic across all video conferencing apps in Europe, and Skype session traffic grew by 304%.

Finally, we believe that soon half of global video traffic will never be seen by humans. Rather, machines connected to the internet of things – autonomous cars, smart surveillance cameras and industrial robots – will use video to sense and analyze their environments to fulfill automated tasks, only alerting humans when specific incidents require attention.

Throughout video’s rapid rise to prominence, Nokia has been at the forefront of its technological development and standardization. For the last 30 years, Nokia has been innovating the technologies that allow audio and video to traverse communications networks. And over that same period we have contributed to audio and video standards in the Moving Picture Experts Group (MPEG) of ISO/IEC, the Telecommunication Standardization Sector of the ITU and in the 3rd Generation Partnership Project (3GPP), guaranteeing interoperability between devices and services while pioneering new audio and video compression technologies and new media formats and systems.

Given Nokia’s position at the nexus of video technology and transport and its large role in developing video media standards, we feel we’re uniquely qualified to make predictions about video’s future. Nokia believes the future of video technology development and adoption will be built on five pillars: immersion, interactivity, cloudification, machine-to-machine communications and intelligence.

Immersive media services delivering a feeling of presence

The entertainment industry and consumers are continuously looking for more compelling media experiences. Immersive media aims at providing users with a strong feeling of presence, for example through the use of ultra-high definition and high dynamic range for more realistic presentation of content in terms of contrast and color. New emerging content experiences, such as game streaming, 360-degree video streaming and ultra-low latency video applications, pose unique demands. Those requirements are being addressed in the ongoing work on the Versatile Video Coding (VVC/H.266) standard, which will also deliver immersive video to mobiles, tablets and TVs even when the available network bandwidth is limited.

The previous MPEG and ITU-T Advanced Video Coding (AVC/H.264) and High Efficiency Video Coding (HEVC/H.265) standards – for high-definition video and ultra-high-definition 4K video respectively – brought Nokia and its standardization partners two Emmy Engineering Awards. Those standards are incorporated into more than 2 billion devices, covering virtually every smartphone, personal computer, television, digital video player and consumer camera. Those technologies are implemented in communication and broadcasting networks and in media cloud services. Considering how ubiquitous the H.264 and H.265 codecs have become, we expect the H.266 codec to have an enormous impact, bringing truly immersive experiences to countless consumer, enterprise and industrial applications around the world.

Interactive media driving remote collaboration in the cloud

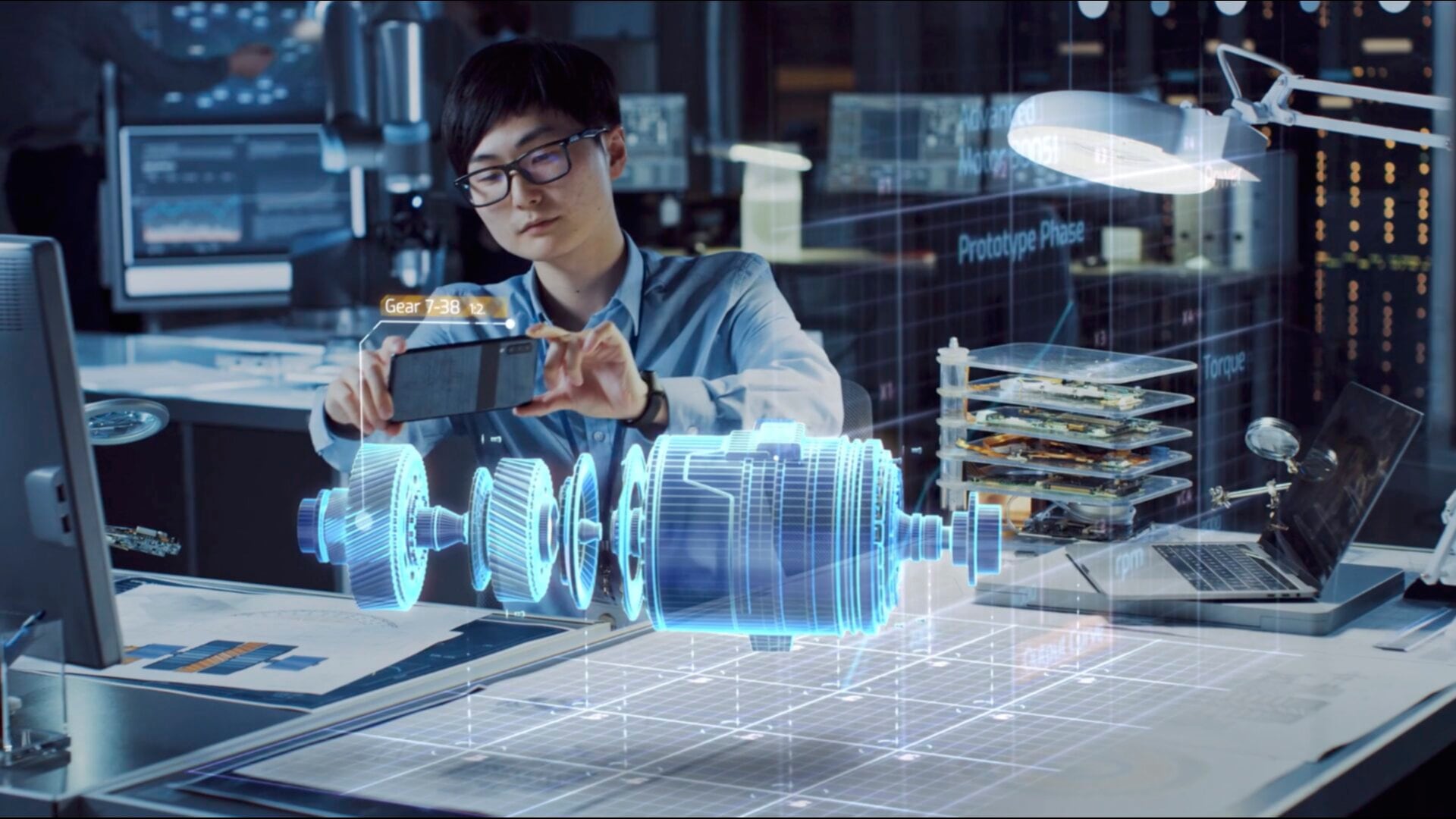

While perceived image and video quality strongly contributes to immersion, immersive media experiences are also driven by the capability to interact with the content, enabled by ultra-low-delay connections to the edge cloud. The growing popularity of augmented and virtual reality services has contributed to the high interest in capturing the real world digitally in three dimensions and distributing such volumetric representations to users, thus enabling them to freely navigate this digital representation. Nokia continues to drive the Omnidirectional Media Format (OMAF) systems standard in MPEG as an interoperable way to store, stream and play back 360-degree multimedia content for virtual reality applications. In particular, OMAF’s viewport-dependent streaming can efficiently deliver ultra-high definition 360-degree video to ease remote collaboration through immersive videoconferencing. Moreover, the developing MPEG Video-based Point Cloud Coding standard has initiated the transition toward compressed volumetric video for free-viewpoint augmented reality experiences.
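To give a feel for the idea behind viewport-dependent streaming, here is a minimal sketch in Python. It is not taken from the OMAF specification: it simply assumes a 360-degree frame split into equal-width tiles along the horizontal (yaw) axis, and the function names `tiles_in_viewport` and `assign_qualities` are hypothetical. Tiles that overlap the viewer's current field of view are requested in high quality and all others in low quality.

```python
def tiles_in_viewport(center_yaw_deg, fov_deg, num_tiles):
    """Return indices of yaw tiles that overlap the viewport.

    Assumption for illustration: the 360-degree frame is divided into
    num_tiles equal-width columns, tile i covering
    [i * width, (i + 1) * width) degrees of yaw.
    """
    tile_width = 360.0 / num_tiles
    half_fov = fov_deg / 2.0
    visible = []
    for i in range(num_tiles):
        tile_center = (i + 0.5) * tile_width
        # Shortest angular distance between tile center and viewport
        # center, taking the wrap-around at 360 degrees into account.
        diff = abs((tile_center - center_yaw_deg + 180.0) % 360.0 - 180.0)
        # Two intervals overlap when their centers are closer than the
        # sum of their half-widths.
        if diff < half_fov + tile_width / 2.0:
            visible.append(i)
    return visible


def assign_qualities(center_yaw_deg, fov_deg, num_tiles):
    """Pick a quality label per tile: high inside the viewport, low outside."""
    visible = set(tiles_in_viewport(center_yaw_deg, fov_deg, num_tiles))
    return ["high" if i in visible else "low" for i in range(num_tiles)]


if __name__ == "__main__":
    # Viewer looks straight ahead (yaw 0) with a 90-degree field of view.
    print(assign_qualities(0.0, 90.0, 8))
```

With eight tiles and a 90-degree field of view, only two of the eight tiles need the high-quality representation at any moment, which is where the bandwidth saving comes from. A real player would also handle pitch, prefetch neighboring tiles and react to head movement.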

In parallel to interactive video, Nokia is driving the international standardization of Immersive Voice and Audio Services (IVAS) in 3GPP that will introduce a new dimension to mobile communications in 5G – spatial audio. Consumers will be able to use their smartphones to capture the entire audio fidelity and depth of a music concert and deliver that vivid experience to others live over 5G. IVAS is also a natural fit for enterprises that want to enrich remote collaboration by placing participants’ voices around the same virtual table in immersive teleconferencing.

Content is becoming more complex with the emergence of new immersive multimedia experiences, so the media services and applications delivering these experiences need to address ever more complex tasks through media processing. To accomplish that, we need to turn to the cloud. Today’s smartphones are powerful media processing devices, but network-based media processing can bring even the most complex, time-critical augmented and virtual reality experiences to any connected device. Nokia has pioneered the MPEG Network-based Media Processing (NBMP) standard to provide developers with well-defined interfaces to perform media processing as unified workflows across smartphones, the edge cloud and the centralized cloud.

Machine-to-machine communication and shared intelligence

The rapidly growing number of connected IoT devices and the increase in deployment of video applications on these connections – such as smart city surveillance (face recognition), car navigation and factory automation (defect detection and grading) – means video is increasingly consumed by machines today. In distributed applications like these, visual sensor data must be efficiently delivered over communication networks for centralized analysis by artificial intelligence (AI) in the cloud. Nokia is actively contributing to MPEG’s ongoing work on Video Coding for Machines that aims at a collaborative approach to compress video, as well as visual features extracted from that video, for both human monitoring and machine vision tasks.

Deep neural networks will become the basis for media analytics in these machine-to-machine applications – AI-powered machine vision will automate countless tasks, relying on a human operator only as backup. But there are currently some big limitations to this approach. The high number of parameters, or weights, that these networks require for machine-vision analysis sets strict requirements for computing capacity and memory, which makes the networks difficult to integrate into resource-constrained devices like surveillance cameras. To extend AI to these kinds of devices, strong compression is required. Moreover, the distribution of deep neural networks over communication networks to a large number of independently operating IoT devices – for instance autonomous vehicles – poses a challenge. MPEG and Nokia are currently tackling these challenges by developing technologies and an open standard for the Compression of Neural Networks for Multimedia Content Description and Analysis (MPEG-NNR). This standard for the compressed representation of neural networks will ensure interoperability with inference environments between different platforms or between the applications of different manufacturers.
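To illustrate why compressing network weights helps on constrained devices, here is a minimal Python sketch of plain uniform 8-bit quantization. This is a generic textbook technique used here as a stand-in; it is not the MPEG-NNR method, and the function names `quantize` and `dequantize` are hypothetical. Each 32-bit floating-point weight is mapped to a small integer code, so the stored model shrinks roughly fourfold at the cost of a bounded rounding error.

```python
def quantize(weights, num_bits=8):
    """Map float weights to integer codes in [0, 2**num_bits - 1].

    Returns the codes plus the scale and offset needed to reconstruct
    approximate weights. Illustrative only, not the MPEG-NNR scheme.
    """
    lo, hi = min(weights), max(weights)
    levels = (1 << num_bits) - 1
    # Guard against a zero range when all weights are identical.
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((w - lo) / scale) for w in weights]
    return codes, scale, lo


def dequantize(codes, scale, lo):
    """Reconstruct approximate float weights from integer codes."""
    return [lo + c * scale for c in codes]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
    codes, scale, lo = quantize(weights)
    restored = dequantize(codes, scale, lo)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(codes)
    print("worst-case error:", worst, "step size:", scale)
```

The reconstruction error per weight is at most half a quantization step, which is why a device can often run inference on the compressed weights with little loss in accuracy. Practical schemes combine quantization with further steps such as pruning and entropy coding.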

Video is everywhere

Digital media is undergoing a rapid evolution well beyond the traditional streaming of content to the living room television. Content is becoming more immersive and media experiences interactive while more and more machines join humans in the consumption of video. Overall, requirements for the efficient compression and low-latency transmission of video stay high. Nokia has a long background in driving technologies and open standardization for interoperable media applications and services, and we will continue to play a key role in building those technologies and influencing those standards in the future.